Using LLMs with dLocal

Boost your dLocal integration workflow with LLMs.

Agentic integrated development environments (agentic IDEs) transform software development by directly embedding AI-powered assistance into coding workflows. You can use them to streamline your dLocal integration in various ways.

We offer tools and best practices to help you make the most of this new development approach.

LLM features

These tools improve how AI assistants interact with our documentation, helping you quickly access relevant content and streamline routine tasks.

Use cases

These tools support different parts of your workflow, whether building tools, writing scripts, or looking for quick answers.

| Feature | Description | Benefit / Use |

|---|---|---|

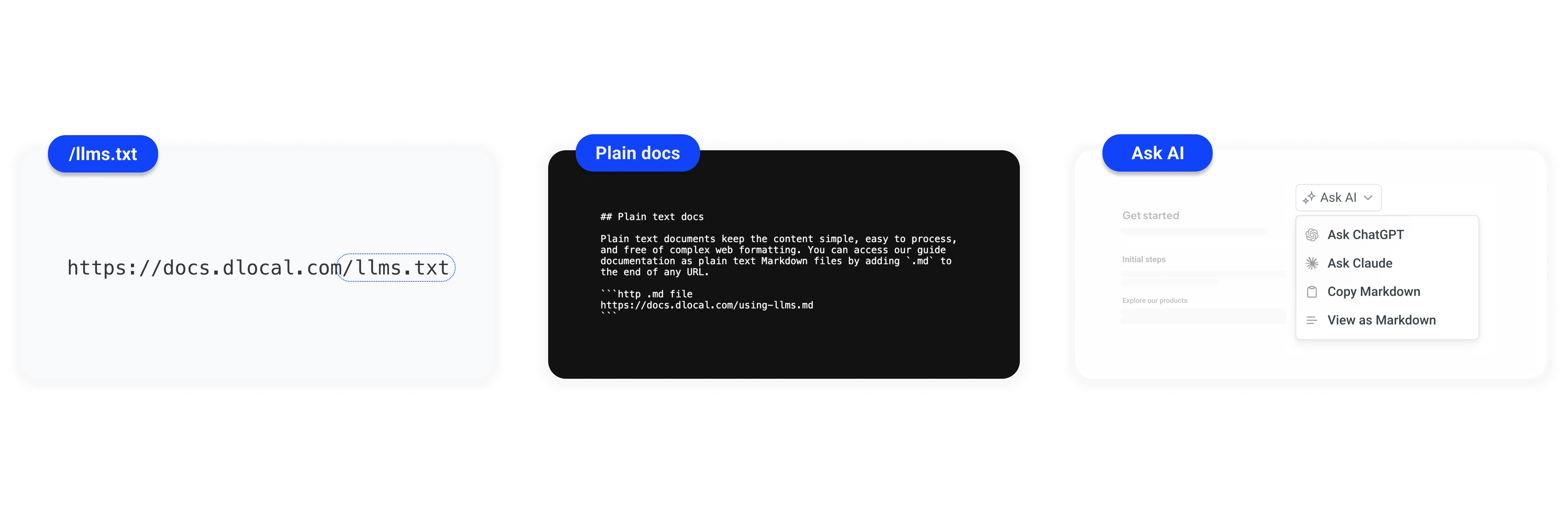

Plain text (llms.txt) | A plain text file located at the site's root instructs LLMs on reading and processing the documentation. | Provide AI models with clear instructions on how to parse and prioritize your documentation content effectively. |

Plain docs (.md) | Documentation pages are available in raw Markdown format by adding .md to the URL. | Copy and reuse documentation content, process pages in scripts or tools without HTML clutter. |

| Ask AI | Each page has a dropdown menu that links to AI assistants (like ChatGPT and Claude) and offers utilities for easy content use. | Send content to your preferred AI tool quickly and speed up your workflow with fewer clicks. |

Setup and resources

Find everything you need to make LLMs work better with your dLocal integration.

LLMs text file location

The /llms.txt file follows a developing standard that helps websites guide LLMs on handling their content. You can find the /llms.txt file at the root of the site:

https://docs.dlocal.com/llms.txt
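
As a rough illustration, the file can be downloaded and scanned for the pages it points to. The sketch below assumes the file lists pages as Markdown links of the form `[title](url)`, which is the convention in the llms.txt proposal but is not confirmed here for this site. The helper names `fetch_text`, `extract_links`, and `print_documentation_index` are hypothetical.

```python
import re
import urllib.request
from typing import List, Tuple

LLMS_TXT_URL = "https://docs.dlocal.com/llms.txt"

# Matches Markdown links of the form [title](url).
# Assumption: the llms.txt file lists documentation pages this way.
LINK_PATTERN = re.compile(r"\[([^\]]+)\]\(([^)\s]+)\)")


def fetch_text(url: str, timeout: float = 10.0) -> str:
    """Download a URL and decode the response body as UTF-8 text."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def extract_links(llms_txt: str) -> List[Tuple[str, str]]:
    """Return (title, url) pairs for every Markdown link found in the text."""
    return LINK_PATTERN.findall(llms_txt)


def print_documentation_index() -> None:
    """Fetch the llms.txt file and print one 'title: url' line per link."""
    for title, url in extract_links(fetch_text(LLMS_TXT_URL)):
        print(f"{title}: {url}")
```

Calling `print_documentation_index()` performs a network request, so it is left for you to run when needed.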

Plain text docs

Plain text documents keep the content simple, easy to process, and free of complex web formatting. You can access our guide documentation as plain text Markdown files by adding .md to the end of any URL.

https://docs.dlocal.com/docs/using-llms.md
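
If you process pages in scripts, the URL rewrite can be automated. This is a minimal sketch of the rule described above (append .md to the page URL); the function name `markdown_url` and the handling of trailing slashes and anchors are assumptions made for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def markdown_url(page_url: str) -> str:
    """Turn a documentation page URL into its raw Markdown URL."""
    parts = urlsplit(page_url)
    # Assumption: a trailing slash is not significant for guide pages.
    path = parts.path.rstrip("/")
    if not path:
        raise ValueError("expected a page URL with a path, got: " + page_url)
    if not path.endswith(".md"):
        path += ".md"
    # Drop the fragment: page anchors do not apply to a raw file download.
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, ""))
```

For example, `markdown_url("https://docs.dlocal.com/docs/using-llms")` yields the URL shown above.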

Ask AI

The Ask AI dropdown appears as a menu icon in the guides.

It links to AI assistants (ChatGPT and Claude) that can explain concepts, answer questions, or generate code examples based on the page content.

It also includes handy tools for working with AI-generated content, such as Copy to Clipboard and View as Markdown.

dLocal Model Context Protocol (MCP) Server

Model Context Protocol (MCP) standardizes how the right context is supplied to large language models (LLMs) so that they behave correctly in specific situations. It makes it possible to connect AI-powered tools (like custom assistants or IDEs) to our APIs and services.

The dLocal MCP server acts as a bridge between your AI agent and dLocal’s systems. It provides a standardized way for agents to search internal knowledge bases (like docs, support articles, and FAQs).

Remote server

We provide a remote MCP server, available at:

https://mcp.dlocal.com/integration

Step by step

To connect to the dLocal MCP server, follow the steps below using your preferred MCP client:

Step 1: Edit your MCP client configuration

Open the configuration file for your MCP client in a text editor. Replace its contents with the following:

    {
      "servers": {
        "dlocal-integration-mcp": {
          "url": "https://mcp.dlocal.com/integration",
          "transport": "http",
          "headers": {
            "x-merchant-id": "{YOUR_MERCHANT_ID}"
          }
        }
      }
    }

Merchant context

Use the x-merchant-id HTTP header to specify the merchant ID (MID) of your account in requests to the MCP server.

To find the MID, log in to the Merchant Dashboard. The ID is displayed next to the account name in the top-left corner of the screen. If you have multiple accounts, make sure to select the correct one first.

This header is optional but recommended. By providing this information, we can improve your experience and deliver more relevant, customized results.
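
Because the header is optional, a script that generates the configuration can include it only when a MID is available. The sketch below mirrors the configuration shown in Step 1; the function name `build_mcp_config` is hypothetical, and leaving out the "headers" key entirely when no MID is given is an assumption about what your MCP client accepts.

```python
import json
from typing import Optional

MCP_URL = "https://mcp.dlocal.com/integration"
SERVER_NAME = "dlocal-integration-mcp"


def build_mcp_config(merchant_id: Optional[str] = None) -> dict:
    """Build the MCP client configuration, adding x-merchant-id only if given."""
    server = {"url": MCP_URL, "transport": "http"}
    if merchant_id:
        # Optional but recommended: lets the server tailor results to the account.
        server["headers"] = {"x-merchant-id": merchant_id}
    return {"servers": {SERVER_NAME: server}}


# Print the configuration without a merchant ID as indented JSON.
print(json.dumps(build_mcp_config(), indent=2))
```

Pass your own MID as the argument to produce the variant with the header.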

Client-specific instructions

Follow the steps below based on the MCP client you're using.

Integration with Visual Studio Code (VSC) Copilot

Create an mcp.json file in your project root with the following configuration:

    {
      "servers": {
        "dlocal-integration-mcp": {
          "url": "https://mcp.dlocal.com/integration",
          "transport": "http",
          "headers": {
            "x-merchant-id": "{YOUR_MERCHANT_ID}"
          }
        }
      }
    }

If the mcp.json file is present in your project, the MCP server will be automatically detected.

You can verify the connection by checking the Copilot status in the Visual Studio Code status bar.

Integration with Claude Desktop

- Open Claude Desktop and go to Preferences > MCP Settings

- Paste the following configuration:

    {
      "servers": {
        "dlocal-integration-mcp": {
          "url": "https://mcp.dlocal.com/integration",
          "transport": "http",
          "headers": {
            "x-merchant-id": "{YOUR_MERCHANT_ID}"
          }
        }
      }
    }

- Click Save and restart Claude Desktop.

The MCP server will be automatically detected and connected.

Integration with Cursor

- Open the Settings from the gear icon in the top-right corner.

- In the Cursor Settings menu, go to the MCP tab.

- Click + Add new global MCP server and paste the following configuration:

    {
      "servers": {
        "dlocal-integration-mcp": {
          "url": "https://mcp.dlocal.com/integration",
          "transport": "http",
          "headers": {
            "x-merchant-id": "{YOUR_MERCHANT_ID}"
          }
        }
      }
    }

- Click Save to add the server.

The MCP server will be automatically detected and connected.

You can verify the connection by checking the MCP status in the Cursor status bar.

Integration with Windsurf

- Open Windsurf and go to Settings > MCP Configuration.

- Paste the following configuration:

    {
      "servers": {
        "dlocal-integration-mcp": {
          "url": "https://mcp.dlocal.com/integration",
          "transport": "http",
          "headers": {
            "x-merchant-id": "{YOUR_MERCHANT_ID}"
          }
        }
      }
    }

- Click Save and restart Windsurf.

The MCP server will be automatically detected.

You can verify the connection in the bottom status bar.

Step 2: Save and restart the client

After updating the configuration, save the file and restart your MCP client.

Step 3: Test the connection

To verify the integration is working correctly, ask the MCP client to perform a supported action, such as searching the dLocal documentation for a topic.
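
If you prefer to check reachability outside an MCP client, a script can send the protocol's opening `initialize` request directly. This is only a sketch under stated assumptions: it assumes the server speaks the MCP Streamable HTTP transport (JSON-RPC over POST) and accepts protocol version 2025-03-26, neither of which is confirmed on this page. The helper names and the `connection-check` client name are hypothetical.

```python
import json
import urllib.request
from typing import Optional

MCP_URL = "https://mcp.dlocal.com/integration"


def build_initialize_request(merchant_id: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 'initialize' request for the MCP server."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            # Assumption: the server accepts this MCP protocol version.
            "protocolVersion": "2025-03-26",
            "capabilities": {},
            "clientInfo": {"name": "connection-check", "version": "0.1.0"},
        },
    }
    headers = {
        "Content-Type": "application/json",
        # Streamable HTTP servers may answer with plain JSON or an event stream.
        "Accept": "application/json, text/event-stream",
    }
    if merchant_id:
        headers["x-merchant-id"] = merchant_id
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(MCP_URL, data=body, headers=headers, method="POST")


def check_connection(merchant_id: Optional[str] = None, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status; raises HTTPError on 4xx/5xx."""
    request = build_initialize_request(merchant_id)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

A 200 status from `check_connection()` would suggest the endpoint is reachable; it does not replace testing through your actual MCP client.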
